In a recent blog post I mentioned I would write a post about what computer science could mean for Enterprise Asset Management.

By coincidence, I recently proposed a framework to describe the different types of computer models that can be used by businesses, especially from an Asset Management perspective.

So as well as meeting that promise, I’m also keen to get feedback from the wider asset management and infrastructure management community on this framework. If you have any thoughts, please do get in touch via LinkedIn or through this blog.

TL;DR

Data models - hold and serve data

Information models - put data into context

Optimisation models - find solutions to problems

Generative models - make up data from existing data

Short story long

All businesses use models. The computer revolution means that Microsoft applications like Excel or Power BI are ubiquitous. Finance use models to help with things like management accounts or tax liabilities. HR use models to understand things like absence or performance. And Asset Managers use models to understand things like which asset interventions are required and when.

The following framework attempts to differentiate between these models in terms of their complexity or “evolution”. This matters because understanding the kind of output each type of model can produce helps frame what kind of model might be needed for a specific business requirement.

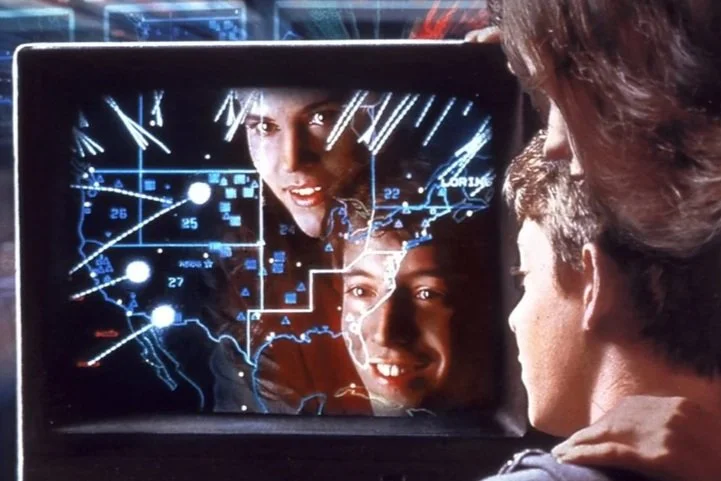

The framework is summarised in the following diagram. Below that, I’ve tried to add further context and detail.

Data Model

As discussed, all asset owners have data models of some kind. Data models are about understanding available data, and while they aren’t always as sophisticated as the headline-grabbing capabilities of a generative model like ChatGPT, data models are really important to the functioning of any organisation or business (or society in general).

A slightly more formal definition of a data model would be “a conceptual representation of data and how it is organized and structured within a database or information system.”

Data models can be represented using various notations, including Entity-Relationship Diagrams (ERDs), UML (Unified Modelling Language) diagrams, and textual descriptions. Populating and using these notations is a bit of a specialism!

Point being, a data model defines the way data is stored, accessed, and manipulated, so that the data can be interrogated.
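To make that concrete, here is a minimal sketch of a data model built with Python’s built-in `sqlite3` module. The tables and columns (`asset`, `intervention` and so on) are hypothetical. The point is that the schema defines how the data is stored, and the query shows how it can then be interrogated.

```python
import sqlite3

# A tiny, hypothetical asset register: the schema *is* the data model.
SCHEMA = """
CREATE TABLE asset (
    asset_id     INTEGER PRIMARY KEY,
    asset_type   TEXT NOT NULL,
    install_year INTEGER NOT NULL
);
CREATE TABLE intervention (
    intervention_id INTEGER PRIMARY KEY,
    asset_id        INTEGER NOT NULL REFERENCES asset(asset_id),
    kind            TEXT CHECK (kind IN ('maintenance', 'renewal')),
    year            INTEGER NOT NULL
);
"""

def build_register() -> sqlite3.Connection:
    """Create an in-memory database and load a few illustrative rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO asset VALUES (?, ?, ?)",
                     [(1, "pump", 1998), (2, "valve", 2010)])
    conn.executemany(
        "INSERT INTO intervention (asset_id, kind, year) VALUES (?, ?, ?)",
        [(1, "maintenance", 2015), (1, "renewal", 2021), (2, "maintenance", 2019)])
    return conn

def interventions_for(conn: sqlite3.Connection, asset_id: int) -> list[tuple[str, int]]:
    """Interrogate the data: every intervention recorded against one asset, oldest first."""
    rows = conn.execute(
        "SELECT kind, year FROM intervention WHERE asset_id = ? ORDER BY year",
        (asset_id,))
    return rows.fetchall()

if __name__ == "__main__":
    conn = build_register()
    print(interventions_for(conn, 1))  # [('maintenance', 2015), ('renewal', 2021)]
```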

Information Model

Information models are about putting data into context to create meaningful insights.

A more formal definition of an information model would be “a conceptual framework that defines the structure, organization, and semantics of information within a particular domain or context.”

Information models serve as a bridge between the abstract representation of data and the technical implementation in databases or information systems.

Generally, information models are built from existing data models. It’s typical for organisations to have some information models; however, the challenge usually lies in how well those models are connected to each other to create the context.

An important difference between a data model and an information model is that an information model provides a high-level representation of data and how it is related, without getting into the specific technical details of how data is stored or processed.

A key attribute of an information model is the relationships that define how entities (the specific data) are connected or related to each other. These connections show how information flows or interacts within the model. Relationships can be one-to-one, one-to-many, or many-to-many, depending on the nature of the connections.

Another key attribute is the information model semantics, which describe the meaning of the data to help understand the significance of data elements and their relationships.
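As a rough illustration of the step from data to information, the sketch below wraps raw records in entities. It adds a one-to-many relationship (one site has many assets) and attaches semantics (what a condition grade actually means). The class names, the site and the 1 to 5 grading scheme are all hypothetical.

```python
from dataclasses import dataclass, field

# Semantics: the meaning attached to raw values (hypothetical 1-to-5 grading scheme).
CONDITION_MEANING = {1: "as new", 2: "good", 3: "fair", 4: "poor", 5: "failed"}

@dataclass
class Asset:
    asset_id: str
    condition_grade: int  # raw data point; its meaning comes from CONDITION_MEANING

    def condition(self) -> str:
        return CONDITION_MEANING[self.condition_grade]

@dataclass
class Site:
    name: str
    assets: list[Asset] = field(default_factory=list)  # one-to-many relationship

    def assets_needing_attention(self, threshold: int = 4) -> list[str]:
        """A question that needs context: which assets at this site are poor or worse?"""
        return [a.asset_id for a in self.assets if a.condition_grade >= threshold]

site = Site("Northern Pumping Station",
            [Asset("P-01", 2), Asset("P-02", 4), Asset("V-07", 5)])
print(site.assets_needing_attention())  # ['P-02', 'V-07']
print(site.assets[1].condition())       # poor
```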

Optimisation Model

This is about making more connections between datasets and insights than could be made manually. It is also about using heuristic methods as a practical substitute for modelling every possible scenario in order to find the best choice.

A more formal definition of an optimisation model is “a mathematical or computational representation of a problem designed to find the best solution among a set of possible solutions.”

The models are used to systematically analyse, evaluate, and make decisions regarding various factors or variables while aiming to maximise or minimise a specific objective or set of objectives, often referred to as the objective function.

The objective function quantifies the goal that needs to be optimised and defines the logical relationship between the decision variables and the objective. For example, this could be “to minimise cost of asset interventions”.

Something that can sometimes cause confusion is that we often talk about minimising an objective function as minimising the “cost”. This isn’t a financial cost. It is a way of describing the value of the objective function, where we might use penalties for outcomes we don’t want and rewards for outcomes we do want. If that didn’t make sense (I’ve witnessed many confused conversations between computer scientists, engineers, and accountants over the years), don’t worry. The point is just that, in an optimisation model, ‘minimising cost’ probably isn’t talking about money.
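Here is a minimal sketch of a penalty-based ‘cost’, assuming made-up weights and scenario fields. The number it returns blends money with penalties and rewards. It is therefore a score to minimise rather than a financial figure.

```python
from dataclasses import dataclass

@dataclass
class Scenario:
    spend_gbp: float      # money actually spent on interventions
    failures: int         # predicted unplanned failures
    assets_renewed: int   # renewals delivered

# Hypothetical weights that turn very different outcomes into one comparable score.
GBP_PER_POINT = 1000      # every £1,000 spent adds 1 point
FAILURE_PENALTY = 50      # penalty for an outcome we don't want
RENEWAL_REWARD = 5        # reward for an outcome we do want

def objective_cost(s: Scenario) -> float:
    """Objective function value to minimise: a score, not a sum of money."""
    return (s.spend_gbp / GBP_PER_POINT
            + FAILURE_PENALTY * s.failures
            - RENEWAL_REWARD * s.assets_renewed)

cheap = Scenario(spend_gbp=100_000, failures=3, assets_renewed=2)
costly = Scenario(spend_gbp=200_000, failures=0, assets_renewed=10)
print(objective_cost(cheap), objective_cost(costly))  # 240.0 150.0
```

Here the scenario that spends twice as much gets the lower ‘cost’ because it avoids the failure penalties. That is exactly the sort of result that can confuse a conversation with accountants.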

Another attribute of optimisation models worth discussing is decision variables. These are the specific quantities that can be controlled or adjusted to influence the outcome of the problem being optimised. Decision variables represent the choices or decisions that need to be made. For example, in Asset Management Whole Life Cost Optimisation, these variables would be things like volume of maintenance vs volume of renewals.

The last attribute to discuss (although there are many more) is constraints. Constraints are mathematical expressions that limit the values of the decision variables (and therefore constrain the optimisation model). A good way to think about this is to consider optimising a railway passenger’s journey. The optimum journey would cost £0, have zero safety issues, and take zero seconds. Obviously, this is impossible: the constraints are what restrict the model to solutions that are actually possible. While input data is intuitively required for any model, it is especially important for an optimisation to have enough data to constrain the model to reality (or at least close enough to reality to be useful).
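The sketch below ties an objective, two decision variables and a set of constraints together. Everything in it is hypothetical. `m` is the number of assets maintained and `r` the number renewed in a year. The costs, risk scores and limits are illustrative numbers rather than real data.

```python
# Hypothetical decision variables: m = assets maintained, r = assets renewed this year.
MAINTAIN_COST, RENEW_COST = 2, 10   # £k per asset (illustrative)
BASE_RISK = 40                      # risk score if we do nothing (illustrative)

def spend(m: int, r: int) -> int:
    """Objective: total spend in £k, to be minimised."""
    return MAINTAIN_COST * m + RENEW_COST * r

# Constraints: each returns True when the candidate (m, r) is allowed.
CONSTRAINTS = {
    "budget <= £60k":          lambda m, r: spend(m, r) <= 60,
    "crew can maintain <= 12": lambda m, r: m <= 12,
    "residual risk <= 20":     lambda m, r: BASE_RISK - m - 4 * r <= 20,
    "non-negative volumes":    lambda m, r: m >= 0 and r >= 0,
}

def violated(m: int, r: int) -> list[str]:
    """Names of the constraints that candidate (m, r) breaks."""
    return [name for name, ok in CONSTRAINTS.items() if not ok(m, r)]

print(spend(0, 0), violated(0, 0))    # 0 ['residual risk <= 20']
print(spend(12, 2), violated(12, 2))  # 44 []
```

Doing nothing gives the best possible spend of zero but breaks the risk constraint. Like the free, instant train journey, it is the constraints that rule it out.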

At the core of any optimisation model is the optimisation algorithm (or algorithms). To find the optimal solution, the model will rely on one (or usually many) computational algorithms. There are many to choose from, and people are coming up with new ideas and ways of optimising all the time:

Enumeration: For simple problems you can just calculate every possible solution. The first question should always be: do I actually need to optimise, or can I make the problem small enough to enumerate?
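As an illustration of how far plain enumeration can go, here is a sketch that checks every combination of five hypothetical candidate projects against a budget. With five projects there are only 2^5 = 32 combinations, so nothing cleverer is needed.

```python
from itertools import product

# Hypothetical candidate interventions: name -> (cost in £k, benefit score).
PROJECTS = {
    "reline main A":   (40, 55),
    "replace pump 3":  (25, 30),
    "renew valve set": (15, 22),
    "repaint bridge":  (30, 25),
    "upgrade SCADA":   (50, 60),
}
BUDGET = 90  # £k

def best_portfolio(projects: dict[str, tuple[int, int]], budget: int) -> tuple[int, list[str]]:
    """Enumerate every include/exclude combination and keep the best affordable one."""
    names = list(projects)
    best_benefit, best_choice = 0, []
    for picks in product((False, True), repeat=len(names)):  # all 2**n combinations
        chosen = [n for n, keep in zip(names, picks) if keep]
        cost = sum(projects[n][0] for n in chosen)
        benefit = sum(projects[n][1] for n in chosen)
        if cost <= budget and benefit > best_benefit:
            best_benefit, best_choice = benefit, chosen
    return best_benefit, best_choice

print(best_portfolio(PROJECTS, BUDGET))  # (115, ['reline main A', 'upgrade SCADA'])
```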

Random Search: An optimisation method that explores the solution space by randomly sampling points, often used when the search space is poorly understood. Quite good for an initial exploration for theoretical or intangible problems.
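Here is a minimal random search sketch. It samples points uniformly from a bounded search space and keeps the best one seen. The test function, bounds and sample count are arbitrary choices for illustration.

```python
import random

def random_search(f, bounds, samples=5000, seed=0):
    """Minimise f(x, y) by sampling uniformly at random inside a square search space."""
    rng = random.Random(seed)
    lo, hi = bounds
    best = None  # (x, y, f(x, y)) of the best sample so far
    for _ in range(samples):
        x, y = rng.uniform(lo, hi), rng.uniform(lo, hi)
        value = f(x, y)
        if best is None or value < best[2]:
            best = (x, y, value)
    return best

def bowl(x, y):
    """Illustrative objective: minimum of 0 at (1, -2)."""
    return (x - 1) ** 2 + (y + 2) ** 2

x, y, v = random_search(bowl, (-5.0, 5.0))
print(f"best found: x={x:.2f}, y={y:.2f}, f={v:.4f}")
```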

Gradient Descent: An iterative algorithm used to find the minimum of a function by adjusting model parameters in the direction of steepest descent (that is, against the gradient). It can be quite fast, but in my (admittedly out-of-date) experience it can get stuck in local optima rather than finding the global optimum.
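To illustrate the local-optimum problem, here is a one-dimensional gradient descent on an arbitrary function with two minima. There is a global minimum near x = -1.30 and a local one near x = 1.13. The learning rate and starting points are also arbitrary, and the starting point alone decides which minimum you end up in.

```python
def f(x: float) -> float:
    """Illustrative objective: global minimum near x = -1.30, local minimum near x = 1.13."""
    return x**4 - 3 * x**2 + x

def df(x: float) -> float:
    """Derivative of f."""
    return 4 * x**3 - 6 * x + 1

def gradient_descent(x0: float, lr: float = 0.01, steps: int = 2000) -> float:
    """Repeatedly step against the gradient (the direction of steepest descent)."""
    x = x0
    for _ in range(steps):
        x -= lr * df(x)
    return x

for start in (-2.0, 2.0):
    x_min = gradient_descent(start)
    print(f"start {start:+.1f} -> x = {x_min:.2f}, f(x) = {f(x_min):.2f}")
```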

Hill Climbing: A simple optimisation algorithm that iteratively makes small modifications to a solution to find an optimal or locally optimal solution. While this is generally a poor way to find the global optimum, it can be quite useful for optimising from known scenarios towards a local optimum. For example, in asset management it’s typical to manually produce a small set of future asset management intervention scenarios. These might be something like (1) following manufacturers’ recommendations, (2) early renewal, (3) high maintenance, and (4) low maintenance. A Hill Climbing algorithm could offer slightly improved scenarios from those “base” scenarios, which are likely to be fairly sensible.
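Here is a sketch of hill climbing from a manually produced base scenario. A scenario is a hypothetical list of renewal years for five assets. The cost function is invented for illustration: it penalises drifting from an assumed ideal year per asset and bunching renewals into the same year. Each step takes the best available move of one asset’s renewal by one year, and the climb stops when no move helps.

```python
from collections import Counter

IDEAL_YEAR = [3, 5, 5, 8, 2]   # hypothetical 'best' renewal year per asset
HORIZON = range(0, 10)         # planning years 0..9

def cost(plan: list[int]) -> int:
    """Illustrative objective: distance from ideal years plus a penalty for bunching."""
    drift = sum(abs(y - ideal) for y, ideal in zip(plan, IDEAL_YEAR))
    bunching = sum(3 * (n - 1) for n in Counter(plan).values() if n > 1)
    return drift + bunching

def neighbours(plan: list[int]):
    """Every plan reachable by moving one asset's renewal one year earlier or later."""
    for i in range(len(plan)):
        for step in (-1, 1):
            year = plan[i] + step
            if year in HORIZON:
                yield plan[:i] + [year] + plan[i + 1:]

def hill_climb(plan: list[int]) -> list[int]:
    """Steepest-move hill climbing (minimising cost) until no neighbour is better."""
    while True:
        best = min(neighbours(plan), key=cost)
        if cost(best) >= cost(plan):
            return plan  # local optimum reached
        plan = best

base = [0, 0, 0, 0, 0]  # e.g. a hypothetical 'early renewal' base scenario
improved = hill_climb(base)
print(base, cost(base), "->", improved, cost(improved))
```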

Genetic Algorithms: (this was my jam at uni) A search heuristic inspired by the process of natural selection, where populations of potential solutions evolve over generations to find optimal or near-optimal solutions. The really cool thing about this is that crossover and mutation mean you can find solutions from across the whole solution space.
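Here is a compact genetic algorithm sketch on a toy problem. It evolves a 20-bit string (think ‘renew this asset or not’) towards a hypothetical target plan. Population size, mutation rate and generation count are arbitrary. The three ingredients to look for are selection, crossover and mutation.

```python
import random

TARGET = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1, 0, 0, 1, 1]  # hypothetical plan

def fitness(genome: list[int]) -> int:
    """Number of positions that agree with the target (higher is better)."""
    return sum(g == t for g, t in zip(genome, TARGET))

def genetic_algorithm(pop_size: int = 40, generations: int = 100,
                      mutation_rate: float = 0.05, seed: int = 1) -> list[int]:
    rng = random.Random(seed)
    n = len(TARGET)
    population = [[rng.randint(0, 1) for _ in range(n)] for _ in range(pop_size)]

    def select() -> list[int]:
        # Tournament selection: the fitter of two random individuals becomes a parent.
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        children = [max(population, key=fitness)]  # elitism: keep the best unchanged
        while len(children) < pop_size:
            p1, p2 = select(), select()
            cut = rng.randrange(1, n)              # single-point crossover
            child = p1[:cut] + p2[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = children
    return max(population, key=fitness)

best = genetic_algorithm()
print(fitness(best), "of", len(TARGET))
```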

Differential Evolution: An evolutionary algorithm that uses the difference between candidate solutions to create new ones in the search for optimal solutions.
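Here is a sketch of the classic DE/rand/1/bin scheme on a simple illustrative function with its minimum at (1, 1, 1). The parameter values `F` and `CR` are common textbook defaults rather than tuned choices. Candidates are not clipped to the bounds, which is fine for this function but not in general.

```python
import random

def sphere(x: list[float]) -> float:
    """Illustrative objective: minimum of 0 at x = (1, 1, 1)."""
    return sum((xi - 1.0) ** 2 for xi in x)

def differential_evolution(f, dim=3, bounds=(-5.0, 5.0), pop_size=20,
                           F=0.8, CR=0.9, generations=300, seed=0):
    """DE/rand/1/bin: build new candidates from scaled differences of existing ones."""
    rng = random.Random(seed)
    lo, hi = bounds
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    scores = [f(p) for p in pop]
    for _ in range(generations):
        for i in range(pop_size):
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            # Mutant vector: base a plus F times the difference between b and c.
            mutant = [pop[a][k] + F * (pop[b][k] - pop[c][k]) for k in range(dim)]
            # Binomial crossover, forcing at least one mutant component (j_rand).
            j_rand = rng.randrange(dim)
            trial = [mutant[k] if (rng.random() < CR or k == j_rand) else pop[i][k]
                     for k in range(dim)]
            trial_score = f(trial)
            if trial_score <= scores[i]:  # greedy one-to-one replacement
                pop[i], scores[i] = trial, trial_score
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best]

x_best, f_best = differential_evolution(sphere)
print([round(v, 3) for v in x_best], f_best)
```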

Simulated Annealing: A probabilistic optimisation technique inspired by the annealing process in metallurgy, used to find the global minimum of a function by accepting occasional uphill moves with decreasing probability.
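Here is a simulated annealing sketch on an arbitrary double-well function, deliberately started in the local minimum near x = 1.13. The starting temperature, cooling rate and step size are illustrative assumptions. Early on, the high temperature lets the search climb out of the local well, and as it cools the search settles down.

```python
import math
import random

def f(x: float) -> float:
    """Illustrative double-well function: global minimum near x = -1.30, local near 1.13."""
    return x**4 - 3 * x**2 + x

def simulated_annealing(x0: float, t0: float = 2.0, cooling: float = 0.995,
                        steps: int = 3000, step_size: float = 0.5, seed: int = 0) -> float:
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    t = t0
    for _ in range(steps):
        candidate = x + rng.uniform(-step_size, step_size)
        delta = f(candidate) - fx
        # Always accept improvements; accept uphill moves with probability exp(-delta / t).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = candidate, fx + delta
            if fx < best_f:
                best_x, best_f = x, fx
        t *= cooling  # geometric cooling: uphill moves become rarer over time
    return best_x

print(round(simulated_annealing(1.13), 2))  # start in the local minimum
```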

Particle Swarm Optimization (PSO): An algorithm that simulates the social behaviour of birds or fish, where particles in the search space move towards the best-known solutions.
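Here is a basic global-best PSO sketch. The inertia weight `w` and pull coefficients `c1` and `c2` are commonly used values rather than tuned ones. The Booth function (minimum 0 at (1, 3)) is just a convenient test problem.

```python
import random

def booth(p: list[float]) -> float:
    """Illustrative test function with its minimum of 0 at (1, 3)."""
    x, y = p
    return (x + 2 * y - 7) ** 2 + (2 * x + y - 5) ** 2

def pso(f, dim=2, bounds=(-5.0, 5.0), n_particles=30, iterations=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO: particles are pulled towards their own best and the swarm's best."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                # each particle's best-known position
    pbest_f = [f(p) for p in pos]
    g = min(range(n_particles), key=pbest_f.__getitem__)
    gbest, gbest_f = pbest[g][:], pbest_f[g]   # the swarm's best-known position
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]                          # inertia
                             + c1 * r1 * (pbest[i][d] - pos[i][d])  # pull to own best
                             + c2 * r2 * (gbest[d] - pos[i][d]))    # pull to swarm best
                pos[i][d] += vel[i][d]
            fi = f(pos[i])
            if fi < pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i][:], fi
                if fi < gbest_f:
                    gbest, gbest_f = pos[i][:], fi
    return gbest, gbest_f

best_pos, best_val = pso(booth)
print([round(v, 3) for v in best_pos], best_val)
```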

Ant Colony Optimization (ACO): An optimization algorithm inspired by the foraging behaviour of ants, where artificial ants explore a solution space and leave pheromone trails to find the optimal path.

Tabu Search: An iterative optimisation algorithm that maintains a short-term memory of visited solutions and uses tabu lists to avoid revisiting previously explored areas.
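Here is a small tabu search sketch on a hypothetical ‘which works fit in the budget’ problem. It makes one simplification: rather than storing whole visited solutions, this version records recently flipped positions. That is a common variant of the tabu list. The sketch also omits refinements such as aspiration criteria.

```python
from collections import deque

# Hypothetical candidate works: (cost in £k, benefit score). Choose a subset within budget.
WORKS = [(12, 10), (20, 21), (15, 13), (30, 28), (8, 6), (25, 22)]
BUDGET = 60

def score(sel: tuple[int, ...]) -> int:
    """Total benefit, heavily penalised for any overspend (higher is better)."""
    cost = sum(c for (c, _), s in zip(WORKS, sel) if s)
    benefit = sum(b for (_, b), s in zip(WORKS, sel) if s)
    return benefit - 10 * max(0, cost - BUDGET)

def flip(sel: tuple[int, ...], k: int) -> tuple[int, ...]:
    """Neighbour move: include or exclude work k."""
    return sel[:k] + (1 - sel[k],) + sel[k + 1:]

def tabu_search(start: tuple[int, ...], iterations: int = 50, tenure: int = 3) -> tuple[int, ...]:
    current = best = start
    tabu = deque(maxlen=tenure)  # short-term memory: recently flipped positions
    for _ in range(iterations):
        allowed = [k for k in range(len(current)) if k not in tabu]
        # Move to the best allowed neighbour, even if it is worse than `current`;
        # this is what lets tabu search walk out of local optima.
        k = max(allowed, key=lambda j: score(flip(current, j)))
        current = flip(current, k)
        tabu.append(k)
        if score(current) > score(best):
            best = current
    return best

best = tabu_search((0,) * len(WORKS))
print(best, score(best))
```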

Linear Programming: A mathematical technique for finding the optimal solution to a linear objective function subject to linear inequality constraints (limited use as a primary optimiser, but quite helpful within a model, especially for a discrete or variable set within a model – for example this could be used to optimise a specific node within an artificial neural network).
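To show the core idea of linear programming, the sketch below solves a tiny two-variable problem by checking every vertex of the feasible region. This works because a bounded linear programme always has an optimum at a vertex. The problem is a standard textbook-style example. For anything real you would use a proper solver (for example `scipy.optimize.linprog`) rather than this brute-force approach.

```python
from itertools import combinations

# Illustrative 2-variable LP: maximise 3x + 5y subject to a*x + b*y <= c for each row.
CONSTRAINTS = [
    (1, 0, 4),    # x <= 4
    (0, 2, 12),   # 2y <= 12
    (3, 2, 18),   # 3x + 2y <= 18
    (-1, 0, 0),   # x >= 0
    (0, -1, 0),   # y >= 0
]
OBJECTIVE = (3, 5)

def solve_2d_lp(constraints, objective, tol=1e-9):
    """For a bounded 2-D LP the optimum lies on a vertex, so check every vertex."""
    best = None
    for (a1, b1, c1), (a2, b2, c2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < tol:
            continue                       # parallel lines: no single intersection
        x = (c1 * b2 - c2 * b1) / det      # Cramer's rule
        y = (a1 * c2 - a2 * c1) / det
        if all(a * x + b * y <= c + tol for a, b, c in constraints):
            z = objective[0] * x + objective[1] * y
            if best is None or z > best[2]:
                best = (x, y, z)
    return best

print(solve_2d_lp(CONSTRAINTS, OBJECTIVE))  # (2.0, 6.0, 36.0)
```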

Integer Programming: Similar to linear programming, but it deals with discrete decision variables rather than continuous ones, often used in combinatorial optimisation problems (can be quite coarse but very useful to speed up working through known sections of the solution space where you don’t want to waste computational effort).
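Here is a sketch of integer programming on the classic 0/1 knapsack problem, using branch and bound with a linear-programming relaxation as the bound. The item values and weights are a common textbook example, not asset data. Practical problems would normally go to a dedicated integer programming solver.

```python
def knapsack_branch_and_bound(values, weights, capacity):
    """0/1 integer programme solved by branch and bound with an LP-relaxation bound."""
    # Sort by value density so the fractional (LP) bound can be computed greedily.
    items = sorted(zip(values, weights), key=lambda vw: vw[0] / vw[1], reverse=True)

    def lp_bound(i, value, room):
        # Relaxation: fill the remaining room greedily, allowing a fraction of one item.
        for v, w in items[i:]:
            if w <= room:
                value, room = value + v, room - w
            else:
                return value + v * room / w
        return value

    best = 0

    def branch(i, value, room):
        nonlocal best
        if i == len(items):
            best = max(best, value)
            return
        if lp_bound(i, value, room) <= best:
            return                              # prune: relaxation can't beat incumbent
        v, w = items[i]
        if w <= room:
            branch(i + 1, value + v, room - w)  # decision variable x_i = 1
        branch(i + 1, value, room)              # decision variable x_i = 0

    branch(0, 0, capacity)
    return best

print(knapsack_branch_and_bound([60, 100, 120], [10, 20, 30], 50))  # 220
```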

Quadratic Programming: A mathematical optimisation technique for solving problems with quadratic objective functions and linear constraints (quite a nice solution where there are harmonics within the dataset, although you generally would need lots of experience with the problem and using optimisation to know the timing to apply this).

Dynamic Programming: An optimisation approach that breaks down a complex problem into smaller subproblems and solves them recursively, often used in sequential decision-making problems.
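Here is a dynamic programming sketch for a hypothetical keep-or-renew decision on a single asset over six years. Maintenance costs rise with age and renewal resets the age, and all the numbers are made up. Each (year, age) subproblem is solved once and cached, which is what makes this dynamic programming rather than enumeration.

```python
from functools import lru_cache

# Hypothetical cost data (£k): annual maintenance rises with asset age; renewal resets age.
MAINT_COST = [1, 2, 4, 7, 11, 16]  # index = age in years, capped at the last entry
MAX_AGE = len(MAINT_COST) - 1
RENEWAL_COST = 10
YEARS = 6

@lru_cache(maxsize=None)
def min_cost(year: int, age: int) -> int:
    """Cheapest total cost from `year` to the end of the horizon, given the asset's age."""
    if year == YEARS:
        return 0
    keep = MAINT_COST[age] + min_cost(year + 1, min(age + 1, MAX_AGE))
    # Renewing pays for the new asset plus its first year of maintenance; it is age 1 next year.
    renew = RENEWAL_COST + MAINT_COST[0] + min_cost(year + 1, 1)
    return min(keep, renew)

def best_plan(age: int) -> list[str]:
    """Recover one optimal sequence of decisions by replaying the recursion."""
    plan = []
    for year in range(YEARS):
        keep = MAINT_COST[age] + min_cost(year + 1, min(age + 1, MAX_AGE))
        if keep == min_cost(year, age):
            plan.append("keep")
            age = min(age + 1, MAX_AGE)
        else:
            plan.append("renew")
            age = 1
    return plan

print(min_cost(0, 2), best_plan(2))
```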

Ultimately, optimisation models provide a systematic and quantitative approach to decision-making by identifying the best course of action given a set of constraints and objectives. There are certainly specific areas where optimisation is being applied (for example pipe network optimisation and railway track rail wear models). The main limitation to date has been having an adequate volume of data, of sufficient quality, to train the models.

Generative Model

Generative models repeatedly model scenarios to train an “artificial intelligence”. This uses learned patterns and connections to generate new data or content, which then forms part of the insights provided. The point here is that they have a form of intelligence, in that they’re generating new data that is similar to, and often indistinguishable from, the data that they have been trained on.

It’s worth noting that these models have gained significant attention in recent years due to their ability to create realistic and creative content, such as text, images, music, and more. That said, the whole “intelligence” aspect is a bit thin. There is no free will and, as of yet, no model is able to escape the paradigm of its training data. Basically, new material isn’t created; it is generated. There’s a philosophical point about perceived creativity: If I think, therefore I am, then if I think that you think, are you? I digress…

Generative AI models are typically based on deep learning techniques (that is, artificial neural networks trained on huge amounts of data). I think that’s why we like to think of them as intelligent: the core learning network is similar to a biological function.
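Deep generative models are vastly more complex, but the underlying idea (learn a pattern from data, then sample new data from it) can be shown with about the simplest generative model there is. The sketch fits a normal distribution to observed values and draws synthetic ones, using Python’s `statistics.NormalDist`. The failure ages below are invented for illustration.

```python
from statistics import NormalDist, mean

# Hypothetical observed ages (years) at which a class of pump failed.
observed = [14.2, 16.8, 15.1, 17.5, 13.9, 16.0, 15.4, 18.1, 14.7, 16.3]

model = NormalDist.from_samples(observed)  # 'training': learn the mean and spread
synthetic = model.samples(1000, seed=42)   # 'generation': new, plausible data points

print(f"learned mean {model.mean:.2f}, stdev {model.stdev:.2f}")
print(f"synthetic mean {mean(synthetic):.2f}")
```

Every synthetic value is drawn from the learned distribution, so the model can’t produce anything its training data didn’t imply. That echoes the earlier point about the paradigm of the training data.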

There is so much to talk about here that I might do another post on how artificial intelligence works and how it is applied in engineering systems.

The ethical and legal aspects are also worth mentioning:

Firstly, training these models requires available data, and the question of who owns that data is quite interesting. The actual models are like black boxes. They’re so complex (and often constantly updating themselves) that we can only ever take a snapshot in time of what the algorithm is doing, and even then they’re built from pure logical functions without semantic meaning. If I walk around an art gallery, get inspired and create some art, did I take something from the original artists? Or, more to the point, did I take something that someone owned?

The second big aspect is that the training data comes from an imperfect world, so the models are inherently biased by their training sets. I was going to go into lots of detail, but this post is already quite long. The main point here is that there have already been examples of models reinforcing racial and gender biases (which catch headlines), but those biases will also affect other things. For example, imagine building a whole life cost model using a training data set from organisation A. That training data will include organisation A’s historic attitudes to risk. When applied to organisation B, that bias could lead to outputs outside of organisation B’s risk tolerance.

Anyway, the point here is not to dwell on the challenges related to the ethics of generative models, but to acknowledge that we need to face into the risks as well as the exciting opportunities.

Reflecting on how models have been applied within infrastructure asset management, these applications have largely been held back by the lack of available training data. What’s really interesting is the opportunity to use generative models to make up for this shortfall in data (as would be required for a standard optimisation model) and instead use massive volumes of data from multiple sources (like the open internet and the closed intranets of companies) to build intelligence that could help to translate poor quality and incomplete data into useful data for future modelling.

Future Models!

That last point touches on a specific project I’m part of. Building on some strategies we’ve developed for applied modelling in asset management use cases, I’m looking to run some pilots with real-world data. So please do get in touch if this is of interest!

Additional Notes

Models are Models

Something always worth repeating is that a model really is just a model. It’s limited by assumptions and simplifications. Without getting too philosophical, it’s not actually possible to truly replicate the thing being modelled. Even if we completely duplicated it, the foundational elements would always be different. Taken to the extreme, this moves from mathematics (logic) to physics (quantum building blocks).

Optimisation is a defined term

Another side rant: I know it’s probably not normal, but I do get quite wound up about people using the word “optimisation” incorrectly. Comparing a couple of scenarios is ranked selection: “Out of these 5 scenarios, which one is better?” Optimisation is estimating every possible solution in order to find not a better one, but the best. One way of doing this is enumeration (calculating every possible scenario). However, even simple problems can have a massive number of solutions, beyond human (or computational) ability to calculate. This is where heuristics come in. They try to demonstrate you’ve done the equivalent of an enumeration calculation, but with the number of calculations reduced to something achievable.
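A quick calculation shows why enumeration runs out of road. Assume, hypothetically, four intervention options per asset per year over a 20-year plan.

```python
def plan_count(options_per_year: int, years: int, assets: int = 1) -> int:
    """Number of distinct plans if every asset independently picks one option every year."""
    return options_per_year ** (years * assets)

print(plan_count(4, 20))                  # 1099511627776 plans for a single asset
print(len(str(plan_count(4, 20, 100))))   # 1205 digits in the count for 100 assets
```

Even at a million evaluations per second, the single-asset case would take around 13 days. The count for 100 assets has 1,205 digits.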

P.S.

If you liked this article, the best compliment you could give me would be to share it with someone who you think would like it!